Spark Error: pyspark: line 45: python: command not found

I installed Spark 2.4.5, and running ./pyspark from its bin directory produces this error. What causes it, and how can it be resolved? If you are a beginner in Spark you might see this error on your machine, so in this tutorial we go through the steps to solve it.

Apache Spark is a powerful in-memory distributed processing engine for Big Data environments. It is a very popular framework and is used in many Big Data applications. To learn Apache Spark you have to install it on your computer. It is easy to install on Ubuntu 18.04 or above, and then you can start learning the framework. If you are a beginner you can get started at the Apache Spark Framework programming tutorial.

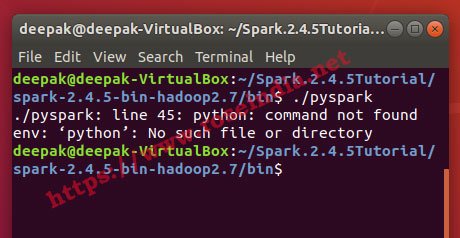

If you are just starting and get this error, it is easy to solve. The error occurs because the python command is not available on the machine. So, if you don't have python and you try to run pyspark, you will get the following error:

deepak@deepak-VirtualBox:~/Spark.2.4.5Tutorial/spark-2.4.5-bin-hadoop2.7/bin$ ./pyspark

./pyspark: line 45: python: command not found

env: ‘python’: No such file or directory

deepak@deepak-VirtualBox:~/Spark.2.4.5Tutorial/spark-2.4.5-bin-hadoop2.7/bin$

Here is the screen shot of the error:

To fix this error, install Python 3.5 or above on the machine. On Ubuntu 18.04 you can install it by following the steps given here.
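Before installing anything, it can help to confirm which interpreter names actually resolve on your PATH. The following is a minimal sketch; the function name check_interpreter is made up for illustration and is not part of Spark.

```shell
# check_interpreter is a hypothetical helper, not part of Spark.
# It reports whether the given command name resolves on PATH.
check_interpreter() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found at $(command -v "$1")"
  else
    echo "$1: not found"
  fi
}

# pyspark fails when the plain "python" name is missing,
# even if "python3" is installed.
check_interpreter python
check_interpreter python3
```

If python is reported as not found while python3 is present, the settings described later in this tutorial should be enough and no new installation is needed.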

Installing Python 3.6 in Ubuntu 18.04

We will install Python 3.6 on Ubuntu 18.04 with the apt utility, which downloads the required packages from the standard Ubuntu repositories. Python 3.6 is available in the Ubuntu 18.04 repositories, so no extra PPA is needed, and you can install it with the following commands:

sudo apt update

sudo apt install python3.6

The above commands install Python 3.6 on your computer. Type the following command in the terminal to display the Python version:

deepak@deepak-VirtualBox:~$ python3 --version

Python 3.6.9
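To confirm that the installed version meets the Python 3.5 minimum mentioned earlier, a small check like the one below could be used. It is only a sketch: version_at_least is a hypothetical helper name, and it assumes that python3 --version prints output in the form "Python X.Y.Z" as shown above.

```shell
# version_at_least VERSION MIN_MAJOR MIN_MINOR
# Hypothetical helper: succeeds when VERSION (e.g. 3.6.9) is at least
# MIN_MAJOR.MIN_MINOR. Only the major and minor parts are compared.
version_at_least() {
  val_major=${1%%.*}          # text before the first dot
  val_minor=${1#*.}           # text after the first dot
  val_minor=${val_minor%%.*}  # keep only the minor number
  [ "$val_major" -gt "$2" ] || { [ "$val_major" -eq "$2" ] && [ "$val_minor" -ge "$3" ]; }
}

if command -v python3 >/dev/null 2>&1; then
  # "python3 --version" prints e.g. "Python 3.6.9"; keep the second field.
  installed=$(python3 --version 2>&1 | awk '{print $2}')
  if version_at_least "$installed" 3 5; then
    echo "Python $installed is new enough"
  else
    echo "Python $installed is older than 3.5"
  fi
fi
```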

Now type python --version to see whether Python 3.6 is reachable through the plain python command. If the command is not found, edit the ~/.bashrc or ~/.bash_aliases file and add the following content:

alias python=python3

export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.7-src.zip:$PYTHONPATH

export PYSPARK_PYTHON=python3

After this, log out and log in again so that the new settings are loaded. You should then be able to run python --version from the terminal.
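Instead of hard-coding python3, the PYSPARK_PYTHON export could be guarded so that it is only set when an interpreter is really present. This is a hedged sketch rather than anything Spark requires: pick_python is a hypothetical helper, and the candidate order (python3 first, then python) is an assumption.

```shell
# pick_python is a hypothetical helper, not part of Spark.
# It prints the first of its arguments that resolves on PATH
# and fails if none of them do.
pick_python() {
  for candidate in "$@"; do
    if command -v "$candidate" >/dev/null 2>&1; then
      echo "$candidate"
      return 0
    fi
  done
  return 1
}

# Prefer python3, fall back to python (assumed order).
if chosen=$(pick_python python3 python); then
  export PYSPARK_PYTHON="$chosen"
else
  echo "No Python interpreter found; install one first" >&2
fi
```

Placed in ~/.bashrc in place of the fixed export line, this leaves PYSPARK_PYTHON unset when no interpreter is installed, rather than pointing it at a command that does not exist.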

Now we are all set. To test pyspark, go to the bin directory of Apache Spark and run the following command:

spark-2.4.5-bin-hadoop2.7/bin$ ./pyspark

./pyspark: line 45: python: command not found

Python 3.6.9 (default, Apr 18 2020, 01:56:04)

[GCC 8.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

20/04/27 19:57:47 WARN Utils: Your hostname, deepak-VirtualBox resolves to a loopback address: 127.0.1.1; using 10.0.2.15 instead (on interface enp0s3)

20/04/27 19:57:47 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

20/04/27 19:57:48 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.5
      /_/

Using Python version 3.6.9 (default, Apr 18 2020 01:56:04)

SparkSession available as 'spark'.

>>>

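With the steps above, the pyspark shell now starts with Python 3.6.9. Note that the "line 45: python: command not found" message is still printed in the output. This is most likely because the script still calls the plain python command at that line, and shell aliases are not applied inside scripts. The shell works anyway because PYSPARK_PYTHON points to python3.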

You can check more tutorials at:

- What is Apache Spark Framework?

- Features and Benefits of Apache Spark

- Introduction to Apache Spark

- Apache Spark data structures

- Apache Spark Framework programming tutorial

- Spark Data Structure

- Apache Spark Scala Shell

- Why is Apache Spark So Hot?

- 5 Invincible Reasons to use Spark for Big Data Analytics?