Scala Shell in Spark - Step-by-step tutorial to run Scala in the Spark shell

After downloading and installing Apache Spark, you can use the Scala shell that ships with the distribution to test your Scala code. In this tutorial I will show you how to start that shell and run simple Scala code in it.

The Apache Spark distribution comes with the spark-shell command-line tool, which is used to run Scala code interactively. You start the tool and type your Scala code at its prompt to see the output immediately. This way developers can test their Scala code while developing applications for Apache Spark.

The spark-shell is an interactive tool that developers can use to quickly test their Scala code before packaging it into a jar file for testing or production deployment. In this section we will see how to use spark-shell to run Scala code interactively.
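As a minimal sketch of this interactive workflow, the lines below can be typed at the scala> prompt one at a time. The names square, numbers, squares and total are illustrative only and are not part of Spark; the snippet uses plain Scala, so it should behave the same in any Scala REPL.

```scala
// Define a small helper function; the shell echoes its signature back.
def square(n: Int): Int = n * n

// Build a collection and transform it; each result is shown immediately.
val numbers = List(1, 2, 3, 4)
val squares = numbers.map(square)

// Combine the values to check the logic before moving it into an application.
val total = squares.sum
println(s"Sum of squares: $total") // prints "Sum of squares: 30"
```

After each line the shell prints the name, type and value of the result, which is what makes it convenient for quick experiments.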

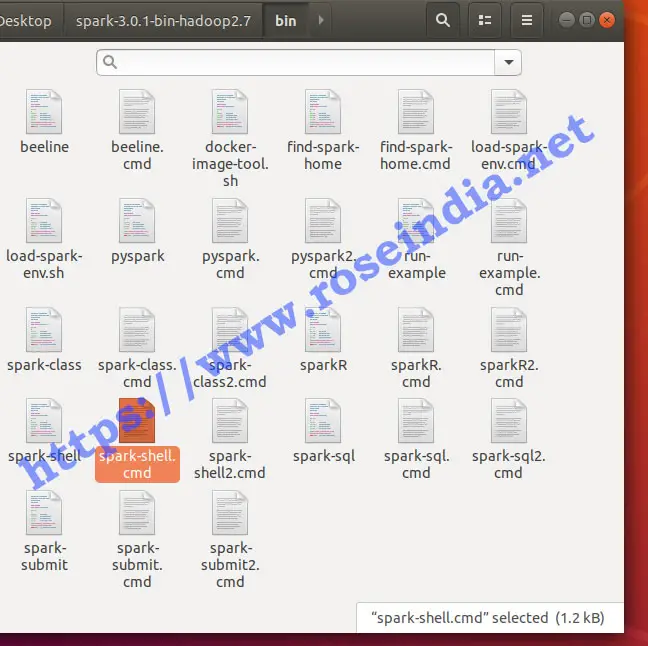

When you go to the bin directory of Apache Spark you will see the following files:

In the bin directory you will find the spark-shell script, which can be run from the terminal if you are using a Linux system. On Windows, spark-shell.cmd can be run from the command prompt. Either one starts the Scala shell, where you can write your Scala program.

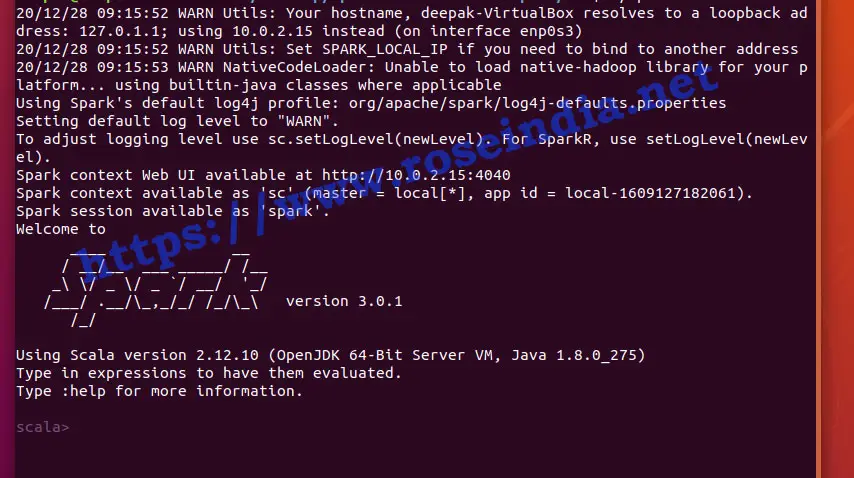

I am using Ubuntu 18.04, so I will open a terminal, go to the bin directory of Apache Spark, and run ./spark-shell. This starts the shell as shown below:

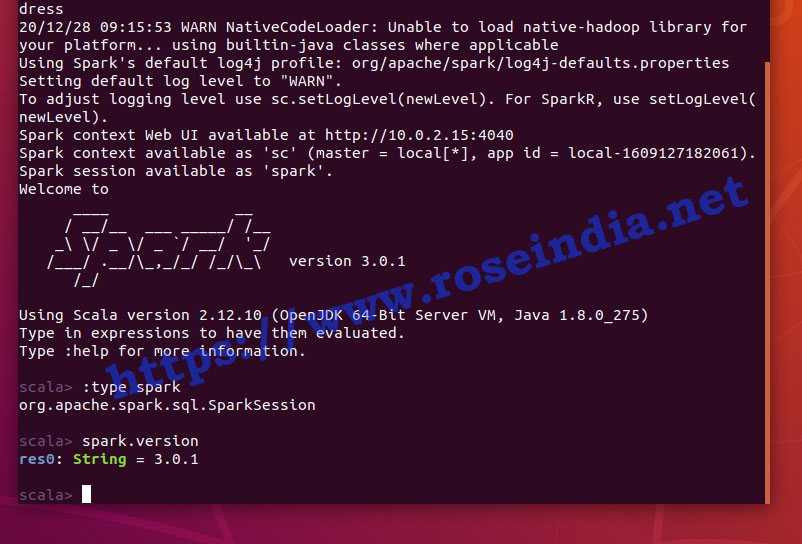

The spark-shell program is an extension of the Scala REPL that comes with the Apache Spark distribution. On startup it instantiates a SparkSession as spark (and a SparkContext as sc), which means you can use spark and sc directly in the shell. To get the type of spark you can run the following code:

scala> :type spark

and it will display the following message:

org.apache.spark.sql.SparkSession

If you want to check the version of Spark, run the following code:

scala> spark.version

The above code displays the following:

res0: String = 3.0.1

We are using Apache Spark version 3.0.1, and the shell reports that version correctly.

Here is the screenshot of the program execution on Spark 3.0.1:

You may also try the following commands in the Spark Scala shell:

:imports

:type spark

:type sc

The :imports command lists the imports in effect in the current session, while :type spark and :type sc show the class types of spark and sc respectively.
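Because spark and sc are already defined when the shell starts, a small job can be tried straight away. The following is a sketch only, assuming a default local spark-shell session; the names doubled and rowCount are illustrative and do not come from Spark itself.

```scala
// Run inside spark-shell, where `sc` (SparkContext) and `spark` (SparkSession)
// already exist; this is not a standalone program.

// Distribute a small range as an RDD, double each element, and collect the results.
val doubled = sc.parallelize(1 to 5).map(_ * 2).collect()

// Create a single-column Dataset holding the values 0 to 9 and count its rows.
val rowCount = spark.range(10).count()

println(doubled.mkString(", ")) // prints "2, 4, 6, 8, 10"
println(rowCount)               // prints "10"
```

The first statement goes through the SparkContext and the second through the SparkSession, so together they confirm that both predefined objects are usable.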

Finally, to exit the shell you can type the following:

:q

The above command exits spark-shell.

In this tutorial we learned how to use the Spark Scala shell.